Project Information

- Project Type: Individual

- Project Duration: April 2024 - June 2025

- GitHub: supungamlath/SNN_Pipeline

The NeuroFall project introduces a novel, energy-efficient system for human fall detection using Spiking Neural Networks (SNNs) and event-based vision, implemented on FPGA hardware. Conducted under the supervision of Dr. Chathuranga Hettiarachchi, this project follows a neuromorphic computing approach to address a critical safety need in elderly care.

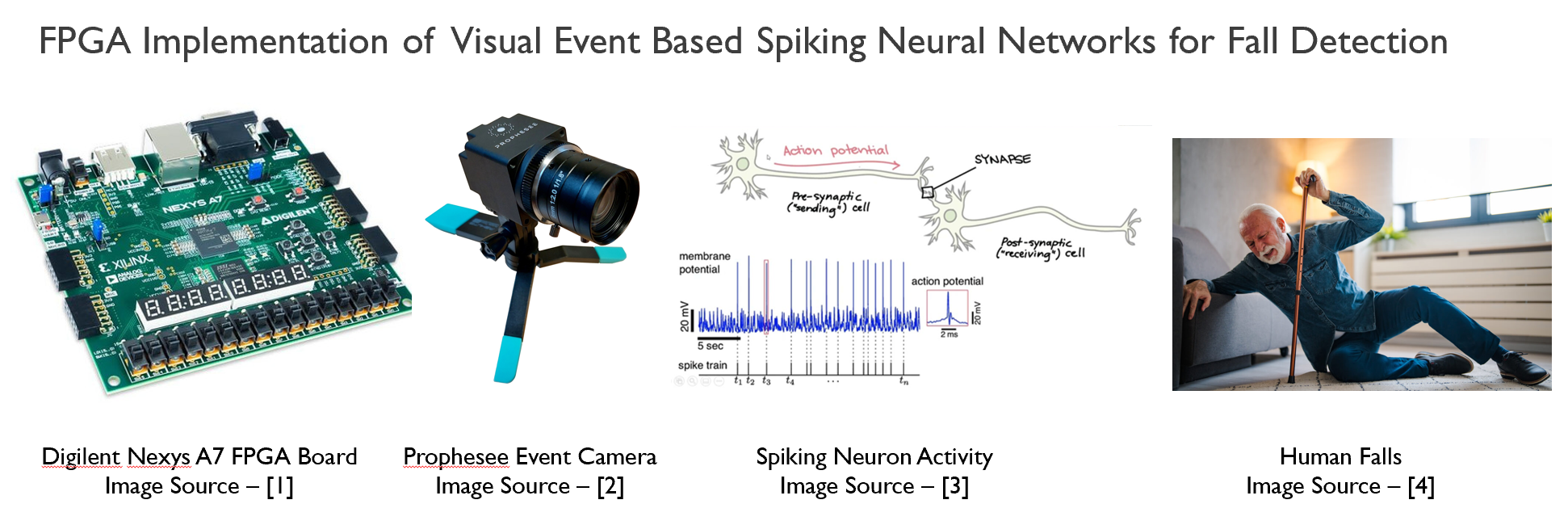

From Video to Events

The project began by converting traditional frame-based video into event-based data that emulates the output of a Dynamic Vision Sensor (DVS). RGB videos from the UP-Fall dataset were converted with tools such as v2e and v2ce into temporally precise streams of visual events. Like biological vision, an event stream records only changes in the scene, which yields a sparse, low-latency representation well suited to spiking models.
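To make the idea of frame-to-event conversion concrete, here is a minimal sketch of the basic DVS principle: a pixel emits an event when its log intensity changes by more than a threshold. This is an illustration only, not the algorithm used by v2e or v2ce, which also model noise, timing interpolation, and multiple events per pixel between frames. The function name `frames_to_events`, the threshold value, and the toy frames are all assumptions made for this example.

```python
import math

def frames_to_events(frames, timestamps, threshold=0.2, eps=1e-3):
    """Emit (t, x, y, polarity) events when a pixel's log intensity
    changes by at least `threshold` since that pixel last fired.

    frames: list of 2D lists of grayscale intensities in [0, 1].
    Simplification: at most one event per pixel per frame.
    """
    height, width = len(frames[0]), len(frames[0][0])
    # Per-pixel reference log intensity, updated whenever the pixel fires.
    ref = [[math.log(frames[0][y][x] + eps) for x in range(width)]
           for y in range(height)]
    events = []
    for frame, t in zip(frames[1:], timestamps[1:]):
        for y in range(height):
            for x in range(width):
                log_i = math.log(frame[y][x] + eps)
                diff = log_i - ref[y][x]
                if abs(diff) >= threshold:
                    polarity = 1 if diff > 0 else -1  # ON or OFF event
                    events.append((t, x, y, polarity))
                    ref[y][x] = log_i
    return events

# Toy 2x2 video: one pixel brightens, one darkens, two stay constant.
frames = [
    [[0.1, 0.5], [0.5, 0.5]],
    [[0.9, 0.5], [0.5, 0.1]],
]
events = frames_to_events(frames, timestamps=[0.0, 0.033])
print(events)
```

Only the two pixels that changed produce events, which is the source of the sparsity that makes event data a natural input for spiking models.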

Designing and Training SNNs

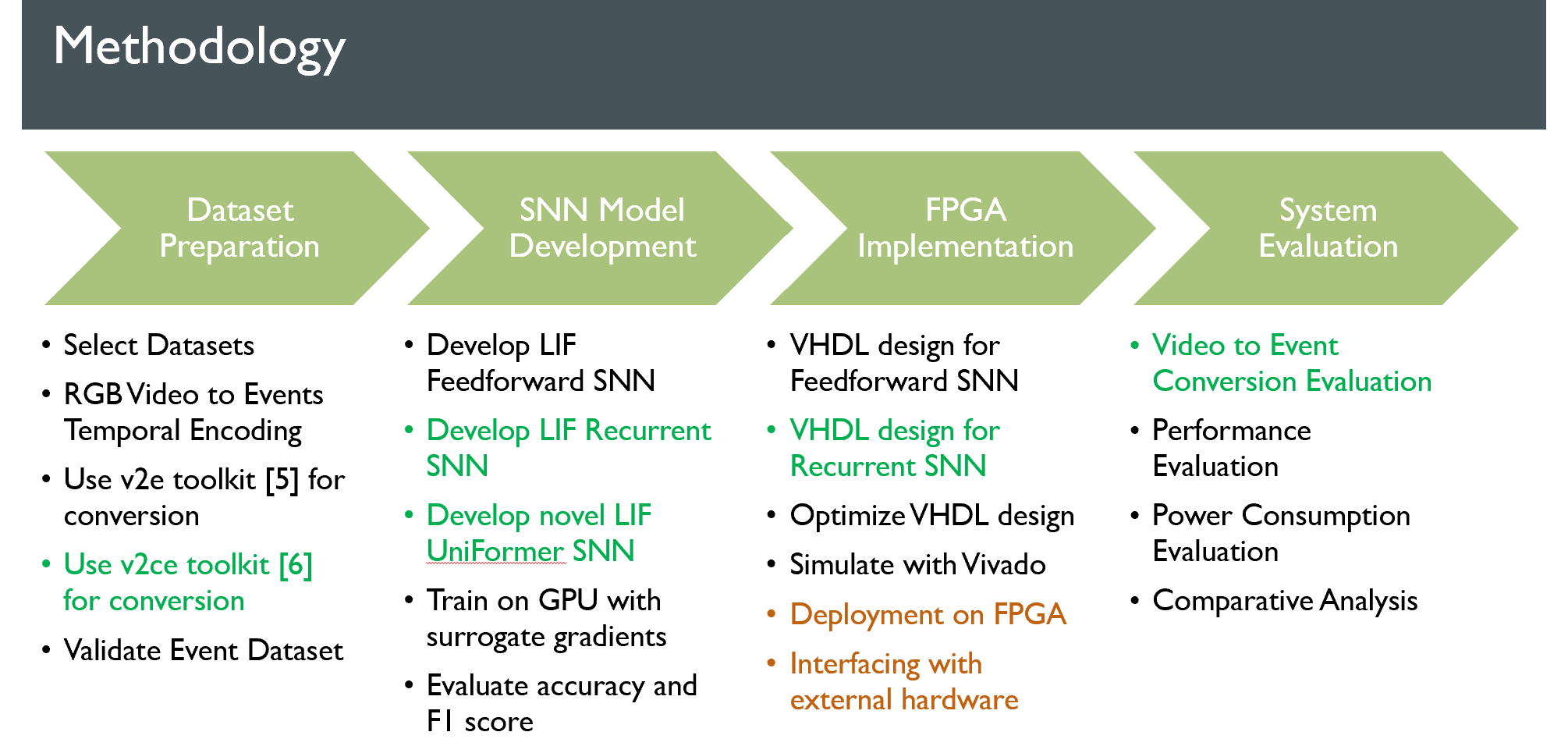

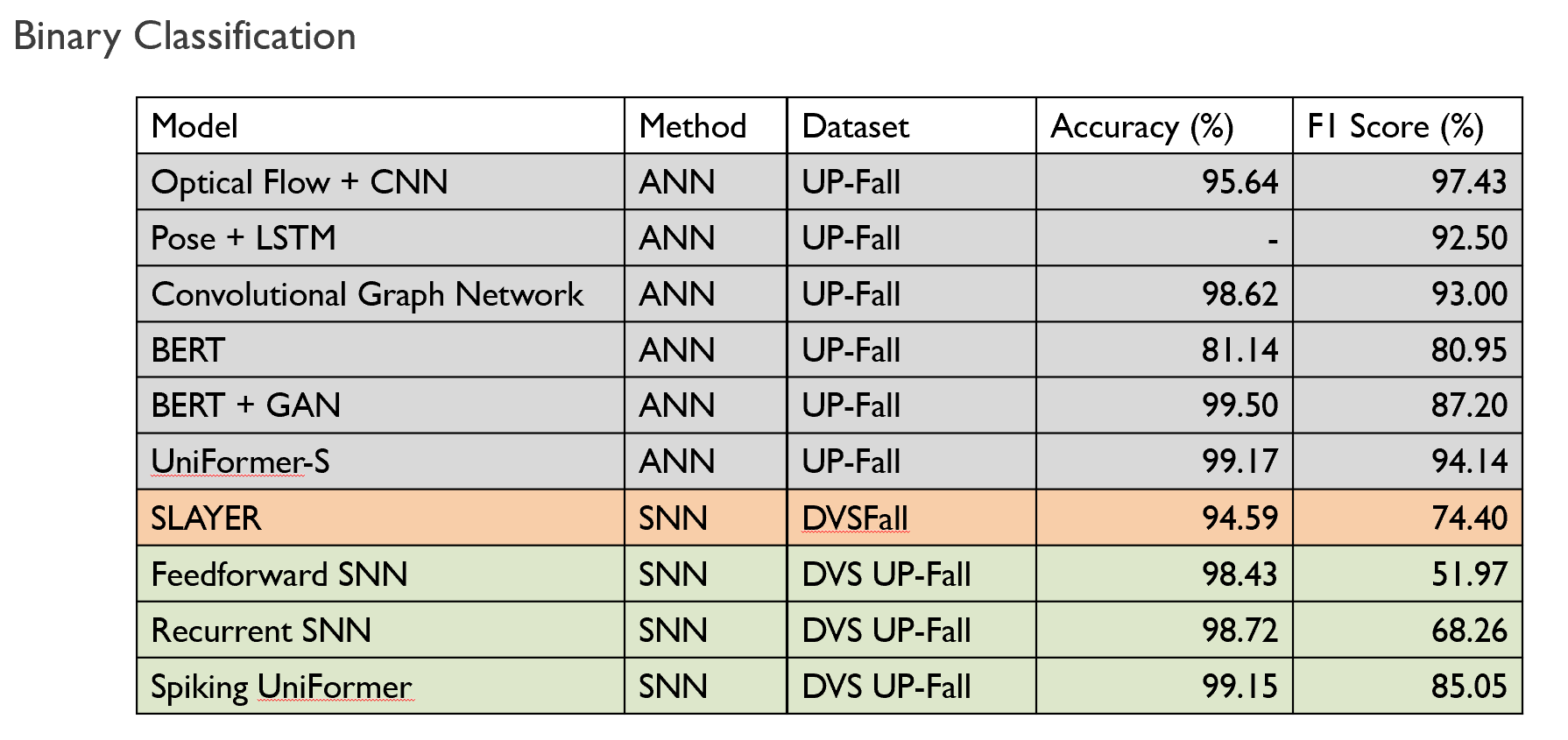

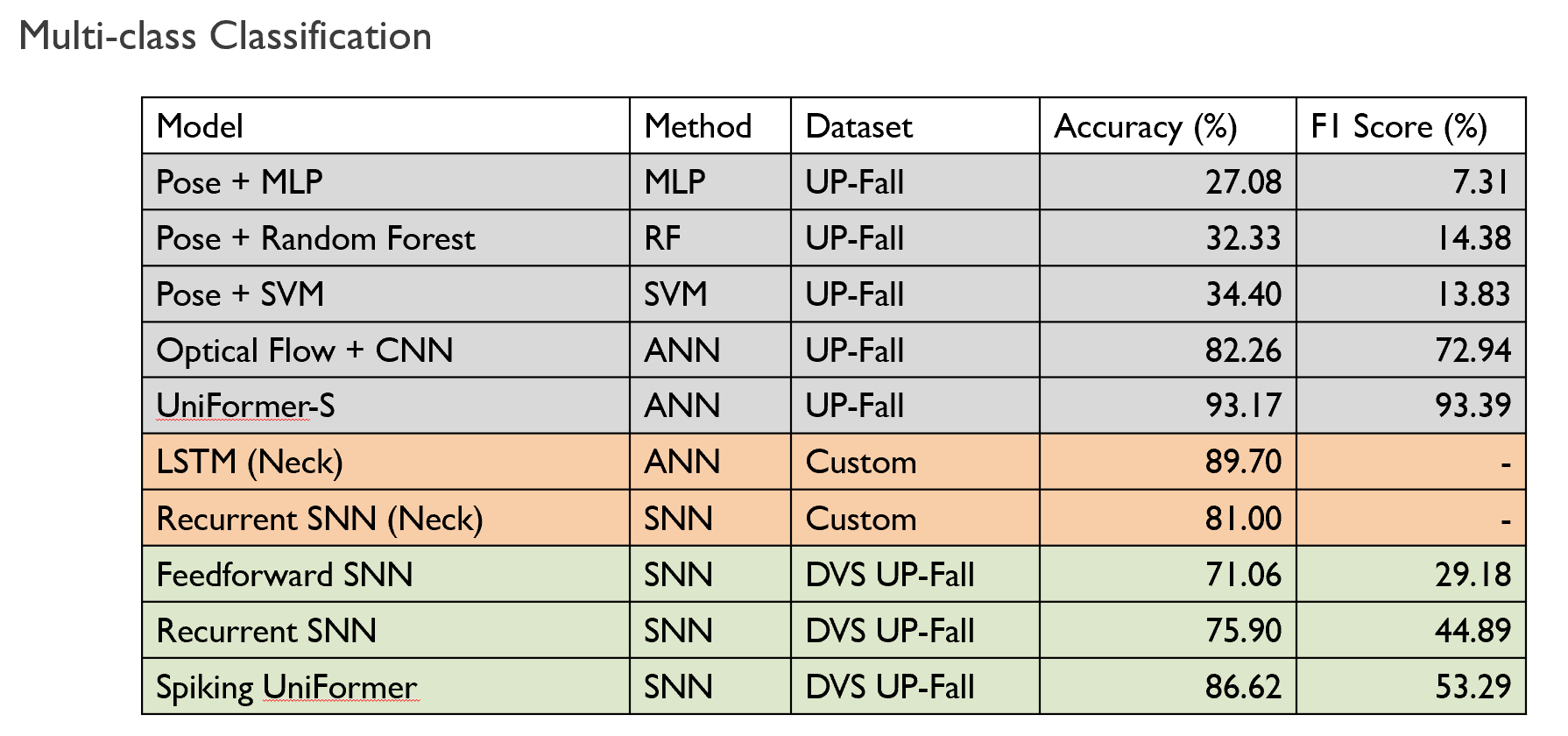

Three SNN architectures were developed:

- A Feedforward SNN using Leaky Integrate-and-Fire (LIF) neurons

- A Recurrent SNN with temporal memory

- A novel Spiking UniFormer featuring spiking self-attention
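As a rough illustration of the Leaky Integrate-and-Fire neuron used in the feedforward model, the sketch below simulates a single LIF neuron in discrete time. The decay factor `beta`, the threshold, and the reset-by-subtraction rule are assumptions chosen for the example and may differ from the project's actual neuron configuration.

```python
def lif_forward(input_currents, beta=0.9, threshold=1.0):
    """Simulate one LIF neuron over discrete time steps.

    Returns the spike train (0 or 1 per step) and the membrane
    potential recorded after each step.
    """
    membrane = 0.0
    spikes, trace = [], []
    for current in input_currents:
        # Leak the previous potential, then integrate the new input.
        membrane = beta * membrane + current
        if membrane >= threshold:
            spikes.append(1)
            membrane -= threshold  # soft reset by subtraction (assumed)
        else:
            spikes.append(0)
        trace.append(membrane)
    return spikes, trace

spikes, trace = lif_forward([0.5, 0.5, 0.5, 0.0, 0.0, 0.5])
print(spikes)
```

The neuron stays silent until enough input has accumulated, fires once, and then decays back toward rest. A recurrent SNN adds connections that feed spikes from one time step back into the layer at the next.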

Efficient Hardware Deployment

To ensure real-world applicability, the models were implemented in VHDL, then synthesized and deployed on the Digilent Nexys A7 FPGA board. Quantization compressed the models significantly, down to 4-bit representations, while preserving accuracy. The hardware design featured state machine control, serial input interfaces, and optimized memory usage.

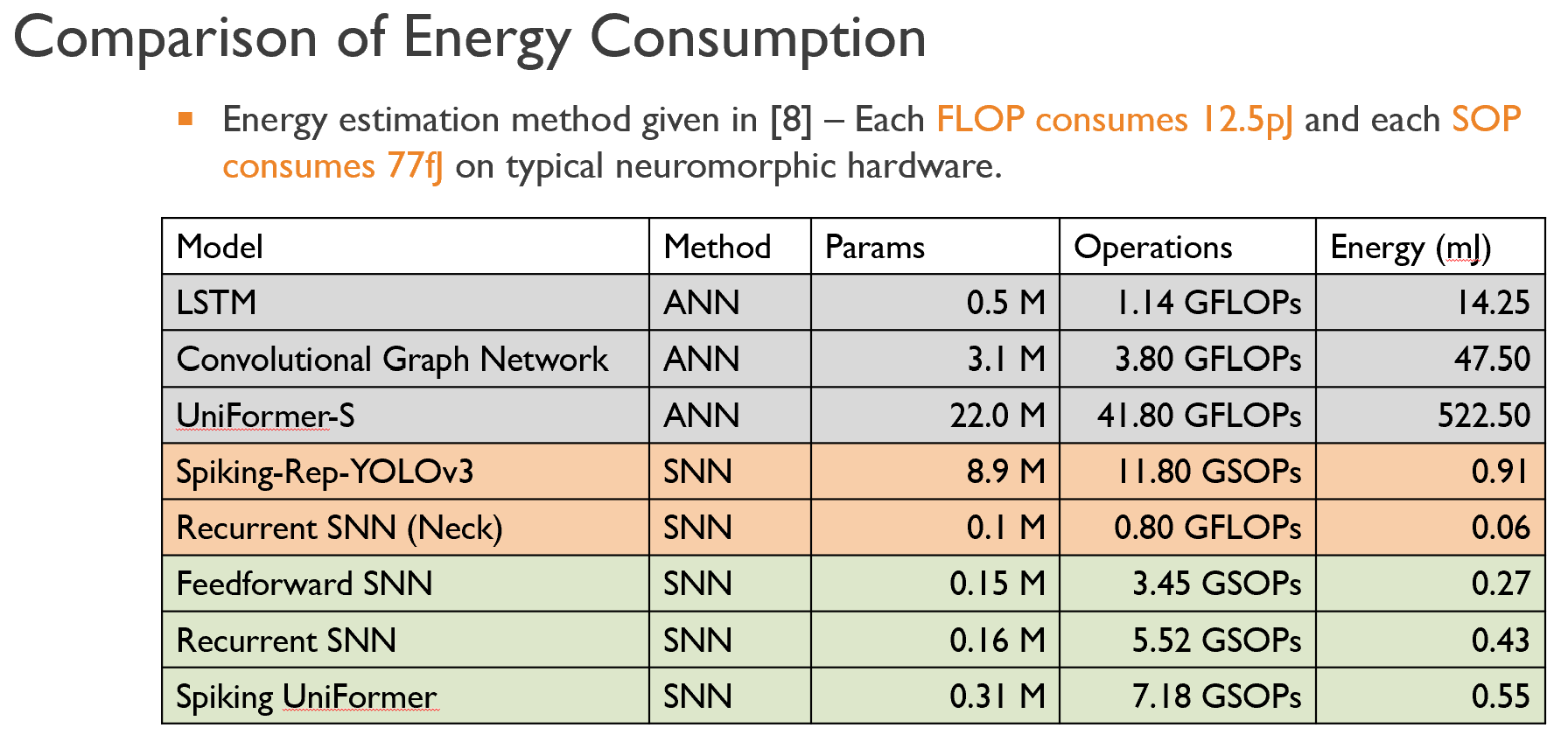
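The 4-bit compression mentioned above can be sketched with symmetric uniform quantization, one common scheme for mapping floating-point weights to small signed integers. The source does not state which quantization method the project used, so the scheme, the function names, and the sample weights here are assumptions.

```python
def quantize_symmetric(weights, bits=4):
    """Map floats to signed integer codes in [-q_max, q_max],
    where q_max = 2**(bits - 1) - 1 (7 for 4 bits)."""
    q_max = 2 ** (bits - 1) - 1
    max_abs = max(abs(w) for w in weights)
    # One scale per tensor; guard against an all-zero tensor.
    scale = max_abs / q_max if max_abs > 0 else 1.0
    codes = [max(-q_max, min(q_max, round(w / scale))) for w in weights]
    return codes, scale

def dequantize(codes, scale):
    """Recover approximate float weights from integer codes."""
    return [c * scale for c in codes]

weights = [0.70, -0.30, 0.12, 0.0, -0.70]  # hypothetical layer weights
codes, scale = quantize_symmetric(weights)
restored = dequantize(codes, scale)
print(codes)
print(restored)
```

On hardware, only the integer codes need to be stored, with the scale folded into neuron thresholds or applied once per layer, which is what reduces memory use on the FPGA.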

Energy Advantage

Compared to traditional deep learning models, the SNN implementations consumed far less energy. For instance, the Spiking UniFormer used only 0.55 mJ, compared with the 522.5 mJ consumed by the UniFormer-S ANN, a reduction by a factor of about 950. This highlights the potential of neuromorphic computing for low-power, edge-deployed AI systems.

Deliverables

The project produced:

- A custom event-based fall dataset

- Trained SNN models with different hyperparameters

- Hardware design for FPGA

- Three research publications